Red Hat and Netronome will address the growing demand for higher performance from the NFV datapath.

NFV applications running on virtualized infrastructure in OpenStack today must achieve 10G line rate. Mobile application VNF vendors are demanding higher speeds, with 25G, 40G, and 100G links to the server.
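
To make the line-rate targets concrete, here is a rough sketch of the per-packet budget at each link speed. It assumes minimum-size 64-byte Ethernet frames plus the standard 20 bytes of on-wire overhead (preamble, start-of-frame delimiter, inter-frame gap); the function names are illustrative only.

```python
# On-wire overhead per Ethernet frame: 7-byte preamble + 1-byte start-of-frame
# delimiter + 12-byte inter-frame gap = 20 bytes.
WIRE_OVERHEAD_BYTES = 20


def line_rate_pps(link_gbps: float, frame_bytes: int = 64) -> float:
    """Maximum frames per second a link can carry for a given frame size."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_per_frame


def per_packet_budget_ns(link_gbps: float, frame_bytes: int = 64) -> float:
    """Time available to process one frame at line rate, in nanoseconds."""
    return 1e9 / line_rate_pps(link_gbps, frame_bytes)


if __name__ == "__main__":
    for gbps in (10, 25, 40, 100):
        mpps = line_rate_pps(gbps) / 1e6
        budget = per_packet_budget_ns(gbps)
        print(f"{gbps:>3}G: {mpps:7.2f} Mpps, {budget:5.2f} ns per 64-byte frame")
```

At 10G this works out to roughly 14.88 million packets per second, or about 67 ns per packet; at 100G the budget shrinks by a factor of ten.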

Accelerated datapath options today and what lies ahead

- SR-IOV (in deployment) - bare-metal performance; switching operations (filtering, tunneling, bonding) require an external top-of-rack (ToR) switch; VM-to-VM traffic requires hairpinning at the ToR

- DPDK-accelerated Open vSwitch (OVS-DPDK) (PoC trials) - needs multiple cores to achieve 10G line rate; the vswitch supports bonding, firewall, conntrack, tunneling, and live migration, but at extra cost

- SmartNIC offload (PoC trials) - offload of OVS flows from the vswitch to a SmartNIC eswitch; support for OVS flow match/action, firewall filtering, tunneling, bonding, NAT, and conntrack; selective acceleration with fallback to the vswitch (slow path); bare-metal performance, no overhead
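
The "selective acceleration with fallback" idea in the last option can be sketched as a toy model. This is not OVS or Netronome code: the class, its fields, and the action strings are hypothetical, and a real eswitch matches on far richer keys than a 5-tuple.

```python
from dataclasses import dataclass, field
from typing import Dict, Tuple

# Simplified flow key: (src IP, dst IP, src port, dst port, protocol).
FlowKey = Tuple[str, str, int, int, str]


@dataclass
class SelectiveOffloadDatapath:
    """Toy model: a small hardware flow table backed by a software vswitch."""

    hw_capacity: int  # max flows the (hypothetical) eswitch can hold
    hw_table: Dict[FlowKey, str] = field(default_factory=dict)
    sw_table: Dict[FlowKey, str] = field(default_factory=dict)

    def install(self, key: FlowKey, action: str, offloadable: bool = True) -> str:
        """Install a flow in software; also offload it if possible."""
        self.sw_table[key] = action  # the vswitch always keeps the flow
        if offloadable and len(self.hw_table) < self.hw_capacity:
            self.hw_table[key] = action
            return "hw"
        return "sw"

    def forward(self, key: FlowKey) -> Tuple[str, str]:
        """Return (path, action): hardware hit first, else software fallback."""
        if key in self.hw_table:
            return ("fast-path", self.hw_table[key])
        if key in self.sw_table:
            return ("slow-path", self.sw_table[key])
        return ("slow-path", "miss")


if __name__ == "__main__":
    dp = SelectiveOffloadDatapath(hw_capacity=1)
    web = ("10.0.0.1", "10.0.0.2", 40000, 80, "tcp")
    dns = ("10.0.0.1", "10.0.0.3", 40001, 53, "udp")
    print(dp.install(web, "output:1"))  # fits in the hardware table
    print(dp.install(dns, "output:2"))  # table full, stays in software
    print(dp.forward(web), dp.forward(dns))
```

Flows the hardware cannot hold or cannot express stay in the vswitch, so correctness never depends on the offload succeeding; only performance does.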

Switch vendors have always used hardware offload with smart ASICs. With the arrival of SmartNICs, NFV use cases can get the best of both worlds: hardware acceleration with feature-rich software, while increasing efficiency.

What will be covered?

- Deployment progression of NFV datapath from SR-IOV to OVS-DPDK to HW offload

- Topologies for HW offload - SR-IOV with OVS offload, bonding, firewall/conntrack use case, live migration, partial vs. full offload, OVS kernel vs. OVS-DPDK offload

- Initial performance benchmarks - SR-IOV vs. OVS-DPDK vs. HW offload

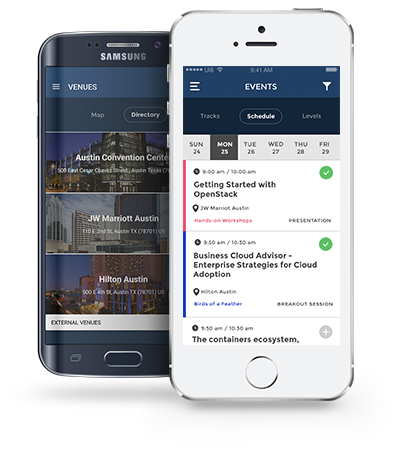

- Integration in OpenStack - SR-IOV to NIC with vswitch fallback, selective flow offload, implications for Nova, Neutron, and Heat orchestration

- Limitations of HW offload - vswitch slow path is slower, max flow capacity, conntrack/NAT support, max 5-tuple filters, prioritizing flows to offload

- Future of HW offload - SDN controllers (OVN and ODL), programmability (eBPF, P4)
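
One of the limitations listed above, choosing which flows to offload when the hardware table has a fixed capacity, can be illustrated with a minimal sketch. It assumes the simplest possible policy (offload the flows carrying the most bytes); the function name and the flow IDs are hypothetical, and real policies may also weigh flow lifetime or offloadability.

```python
import heapq
from typing import Dict, List


def pick_flows_to_offload(byte_counts: Dict[str, int], hw_capacity: int) -> List[str]:
    """Return up to hw_capacity flow IDs, heaviest traffic first.

    byte_counts maps a flow ID to the bytes observed for that flow in the
    last measurement interval (an assumed input for this sketch).
    """
    if hw_capacity <= 0:
        return []
    return heapq.nlargest(hw_capacity, byte_counts, key=byte_counts.get)


if __name__ == "__main__":
    observed = {"flow-a": 100, "flow-b": 5000, "flow-c": 42, "flow-d": 900}
    # With room for only two hardware flows, the two heaviest are chosen;
    # the rest remain on the vswitch slow path.
    print(pick_flows_to_offload(observed, hw_capacity=2))
```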